A Deep Dive into the Comparison of Mistral, Mixtral, and Llama 2: Unraveling the State-of-the-Art Language Models

Introduction

The field of natural language processing has advanced rapidly with the arrival of large language models (LLMs). Among open-weight models, Meta's Llama 2 and Mistral AI's Mistral 7B and Mixtral 8x7B stand out. This blog post compares the three, looking at how they differ in performance, architecture, and use cases.

Performance Assessment

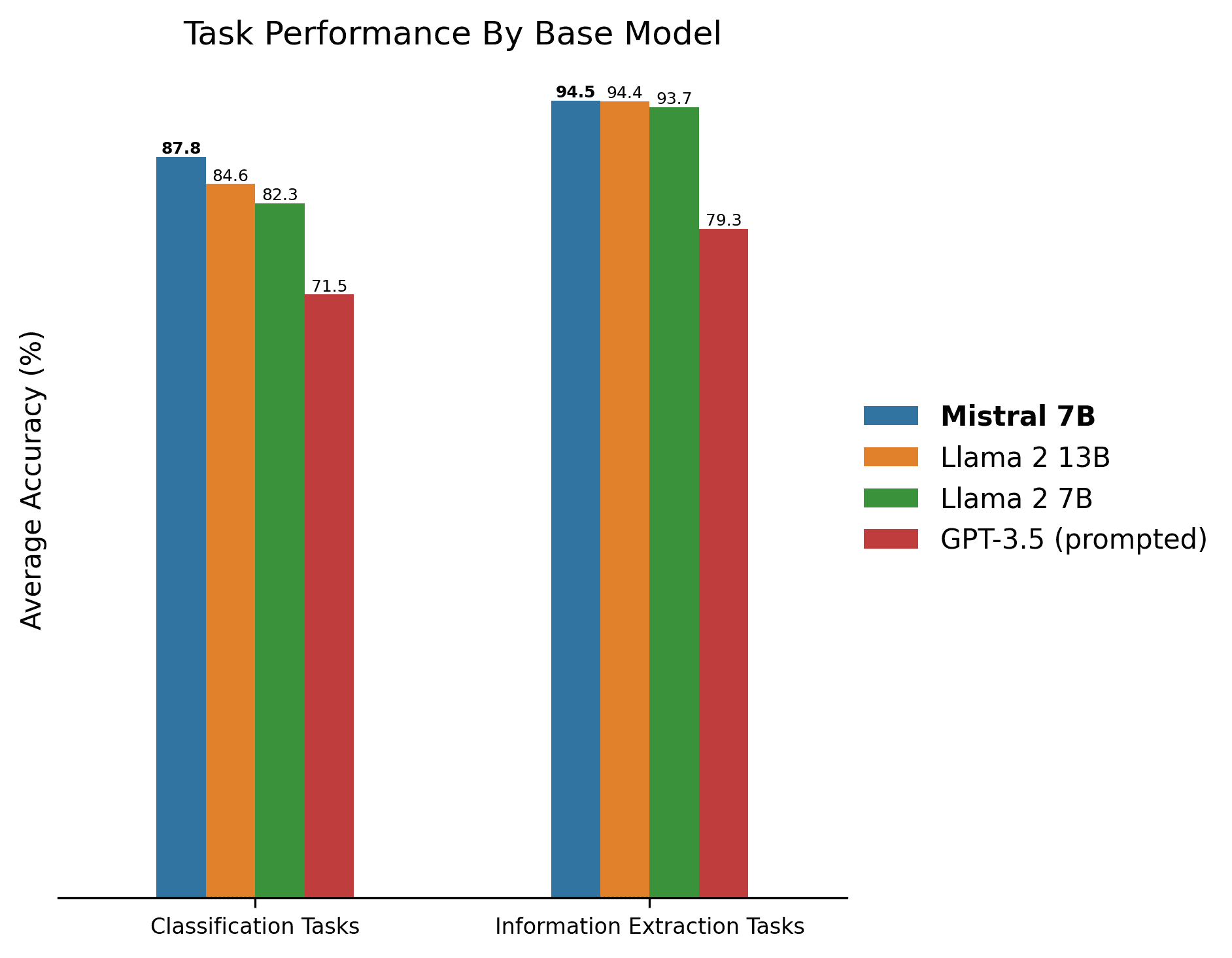

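Mistral 7B, the first model released by Mistral AI, outperforms Llama 2 13B on every benchmark in Mistral AI's evaluation, which covers commonsense reasoning, world knowledge, reading comprehension, math, and code, despite having roughly half as many parameters. Mixtral 8x7B, Mistral AI's later sparse mixture-of-experts model, matches or outperforms Llama 2 70B on the benchmarks Mistral AI reports. These results come from Mistral AI's own evaluations.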
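Published benchmark tables are a starting point. When comparing the models on your own data, perplexity (the exponential of the average per-token negative log-likelihood; lower is better) is a common model-agnostic metric. The sketch below only does that arithmetic: the log-probabilities are made-up placeholders, not real outputs of any of these models, and how you obtain them depends on your inference stack. Per-token perplexity is only directly comparable between models that tokenize text the same way.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood) over the scored tokens.

    `token_log_probs` are natural-log probabilities a model assigned to each
    actual next token of a held-out text. Lower perplexity is better.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Made-up log-probabilities for the same 4-token text under two hypothetical models.
model_a = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.125)]
model_b = [math.log(0.25)] * 4

print(round(perplexity(model_a), 3))  # 2 ** 1.75 ≈ 3.364
print(round(perplexity(model_b), 3))  # 4.0
```

Here the first hypothetical model assigns higher probability to the text on average, so its perplexity is lower.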

Architectural Differences

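Architecturally, the three models are more alike than they might appear: all are decoder-only transformers built on self-attention, and none uses a recurrent (RNN) architecture. The differences lie in the details. Mistral 7B uses sliding-window attention, in which each token attends to at most the previous 4,096 positions in a layer, together with grouped-query attention to reduce inference cost; Llama 2 uses full causal attention, with grouped-query attention only in its larger variants. Mixtral 8x7B keeps Mistral's overall design but replaces each feed-forward block with a sparse mixture of eight experts, of which a router selects two per token, so only about 13B of its roughly 47B parameters are used for any given token. These choices mainly target inference efficiency: memory use and speed, especially on long inputs.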
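The two mechanisms that set the Mistral models apart can be sketched in a few lines of pure Python: Mistral 7B's sliding-window causal mask and Mixtral 8x7B's top-2 expert routing. This is a toy illustration under simplifying assumptions, not either model's implementation: it works on plain lists for a single sequence or token, the function names are hypothetical, and the "experts" are simple scaling functions rather than the feed-forward blocks the real model uses.

```python
import math
from typing import Callable, List, Sequence


def sliding_window_causal_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Attention is causal (j <= i) and limited to the last `window` positions
    (j > i - window). Mistral 7B uses window = 4096; with window >= seq_len this
    reduces to the ordinary causal mask used by Llama 2.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


Expert = Callable[[Sequence[float]], List[float]]


def top2_moe(x: Sequence[float], gate_logits: Sequence[float], experts: Sequence[Expert]) -> List[float]:
    """Sparse mixture-of-experts output for a single token with top-2 routing.

    Only the two experts with the highest gate logits are evaluated; their outputs
    are mixed with weights from a softmax taken over just those two logits.
    """
    top2 = sorted(range(len(experts)), key=lambda e: gate_logits[e], reverse=True)[:2]
    m = max(gate_logits[e] for e in top2)  # subtract the max for numerical stability
    weights = {e: math.exp(gate_logits[e] - m) for e in top2}
    norm = sum(weights.values())
    out = [0.0] * len(x)
    for e in top2:
        y = experts[e](x)  # the other experts are never run for this token
        out = [o + (weights[e] / norm) * yi for o, yi in zip(out, y)]
    return out


# Usage: a 4-token sequence with a window of 2 ("1" = may attend).
for row in sliding_window_causal_mask(4, 2):
    print("".join("1" if allowed else "." for allowed in row))

# Usage: 8 toy experts (expert k multiplies its input by k); the gate favors experts 2 and 6.
toy_experts = [lambda v, k=k: [k * vi for vi in v] for k in range(8)]
print(top2_moe([1.0, 2.0], [0, 0, 3, 0, 0, 0, 3, 0], toy_experts))  # [4.0, 8.0]
```

Because only two experts run per token, per-token compute scales with two experts rather than eight, while all eight must still be kept in memory.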

Use Cases and Applications

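The right choice depends on the task, the hardware budget, and the required context length more than on any fixed ranking. Mistral 7B offers strong quality for its size, which makes it a good fit when inference cost or latency matters. According to Mistral AI's reported results, Mixtral 8x7B approaches the quality of much larger dense models at the per-token compute of a roughly 13B model, but all of its experts must still be held in memory. Llama 2 comes in 7B, 13B, and 70B sizes, each with a chat-tuned variant, giving a wide range of size-versus-quality trade-offs. For a specific application such as translation, dialogue, summarization, or question answering, the most reliable guide is to evaluate the candidates on representative prompts.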
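One rough way to weigh these models for deployment is to compare the memory needed just to store each model's weights with the parameters actually used per token. The sketch below is back-of-the-envelope arithmetic under stated assumptions: Llama 2 sizes are the nominal 7B/13B/70B, Mistral 7B is taken as 7.3B parameters, and Mixtral 8x7B as 46.7B total with 12.9B active per token (Mistral AI's published figures). It ignores the KV cache, activations, and quantization overhead, and the helper name is hypothetical.

```python
# Approximate parameter counts in billions: (total, active per token).
# Dense models use every parameter for every token, so total == active.
MODELS = {
    "Llama 2 7B": (7.0, 7.0),
    "Llama 2 13B": (13.0, 13.0),
    "Llama 2 70B": (70.0, 70.0),
    "Mistral 7B": (7.3, 7.3),
    "Mixtral 8x7B": (46.7, 12.9),
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Decimal GB needed for the weights alone: 1e9 params * bytes/param = bytes/param GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


for name, (total, active) in MODELS.items():
    print(f"{name:<13} active/token: {active:5.1f}B  "
          f"fp16 weights: {weight_memory_gb(total):6.1f} GB  "
          f"int4 weights: {weight_memory_gb(total, 'int4'):5.1f} GB")
```

Under these assumptions, Mixtral's per-token compute is in the range of Llama 2 13B, while its fp16 weights alone take roughly 93 GB, far more than a dense model of similar speed.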

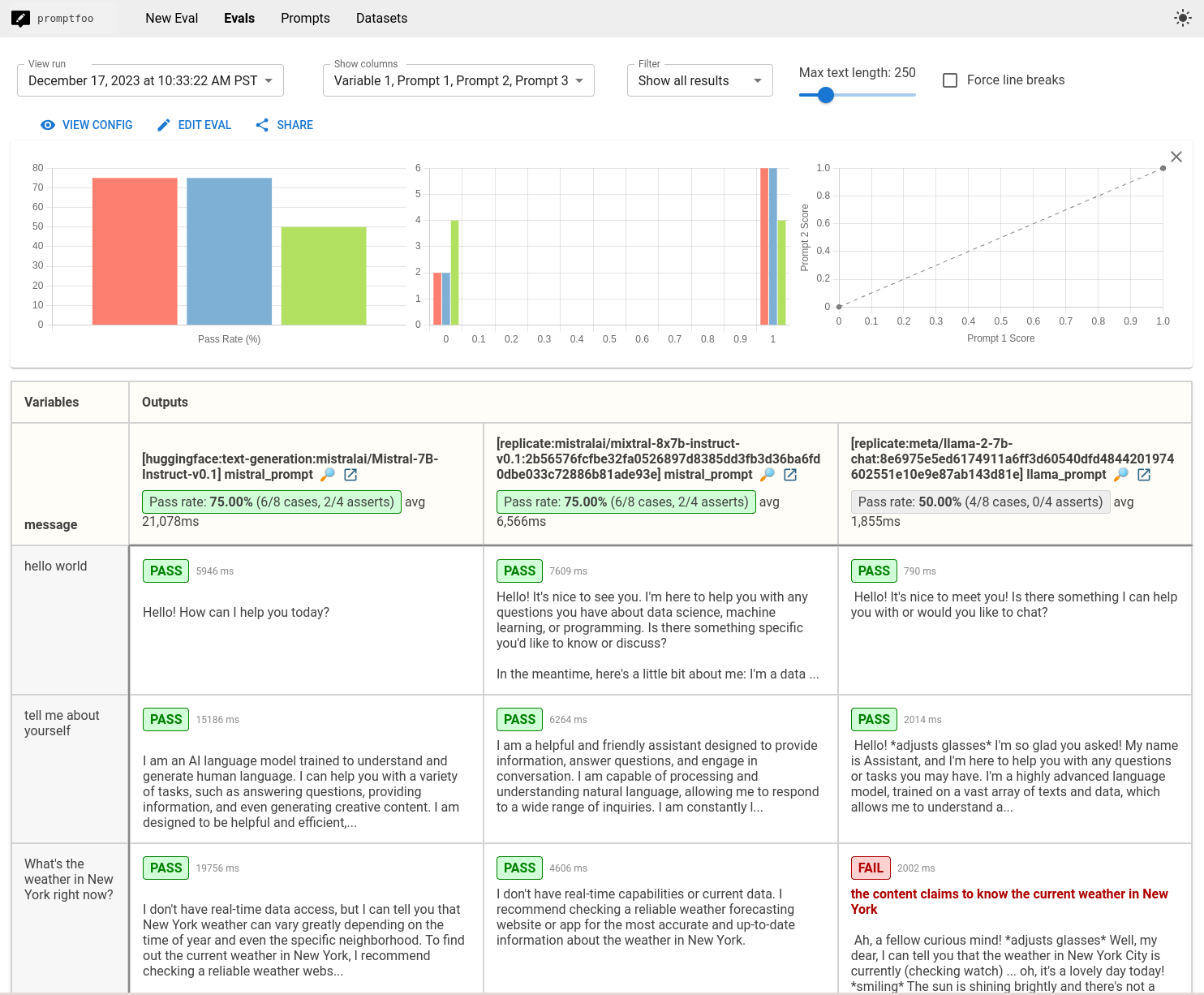

Comparative Evaluation Tool: Promptfoo CLI

To compare Mistral, Mixtral, and Llama 2 on your own tasks, we recommend the Promptfoo CLI. It lets developers define prompts and test cases, run them against several models side by side, and check each output against assertions to see which model performs best.
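To show the idea underneath this kind of tool (the same prompt template, several models, pass/fail checks on each output), here is a hypothetical plain-Python harness. It does not use Promptfoo's API or call any real model: the two model functions are stubs that you would replace with calls to Mistral 7B, Mixtral 8x7B, or Llama 2 through whatever inference service you use, and the substring check is a simplified stand-in for richer assertions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A "model" here is any function mapping a prompt string to a completion string.
ModelFn = Callable[[str], str]


@dataclass
class EvalCase:
    variables: Dict[str, str]  # values substituted into the prompt template
    must_contain: str          # passes if this substring appears (case-insensitive)


def run_eval(template: str, models: Dict[str, ModelFn], cases: List[EvalCase]) -> Dict[str, float]:
    """Run every case against every model and return each model's pass rate."""
    scores: Dict[str, float] = {}
    for name, model in models.items():
        passed = 0
        for case in cases:
            output = model(template.format(**case.variables))
            if case.must_contain.lower() in output.lower():
                passed += 1
        scores[name] = passed / len(cases)
    return scores


# Hypothetical stubs standing in for real model calls.
def stub_model_a(prompt: str) -> str:
    return "Paris." if "France" in prompt else "The answer is 4."


def stub_model_b(prompt: str) -> str:
    return "Paris." if "France" in prompt else "Five."


CASES = [
    EvalCase({"question": "What is the capital of France?"}, "paris"),
    EvalCase({"question": "What is 2 + 2?"}, "4"),
]

results = run_eval("Answer concisely: {question}", {"model_a": stub_model_a, "model_b": stub_model_b}, CASES)
print(results)  # {'model_a': 1.0, 'model_b': 0.5}
```

Keeping the prompt template and test cases fixed while swapping only the model functions is what makes the pass rates comparable across models.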

Conclusion

Mistral, Mixtral, and Llama 2 represent the leading edge of LLM development. Each model offers unique advantages and drawbacks, making them suitable for different applications. By understanding their performance, architecture, and use cases, developers can leverage these models effectively to unlock new possibilities in natural language processing.
